The chatbot ChatGPT has been generating debate since it was unveiled in November 2022. Credit: Shutterstock

Researchers are keen to experiment with using generative AI tools such as the advanced chatbot ChatGPT to help with their work, according to a survey of Nature readers. But they are also concerned about the potential for errors and false information.

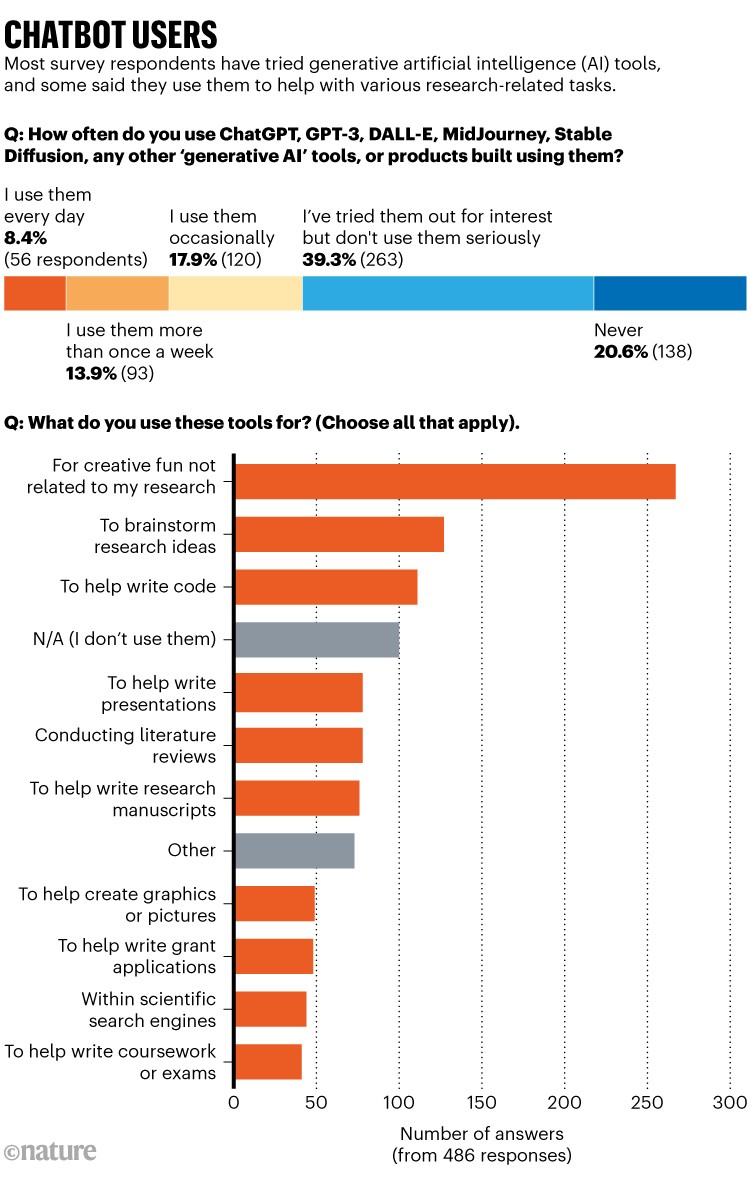

Of 672 readers who responded to an online questionnaire, around 80% have used ChatGPT or a similar AI tool at least once. More than one-fifth use such tools regularly — 8% said they use them every day, and 14% several times per week. Around 38% of respondents know of other researchers who use the tools for research or teaching (see ‘Chatbot users’).

A considerable proportion of respondents — 57% — said they use ChatGPT or similar tools for “creative fun not related to research”. Among the uses related to science, brainstorming research ideas was the most common, with 27% of respondents indicating they had tried it. Almost 24% of respondents said they use generative AI tools for writing computer code, and around 16% each said they use the tools to help write research manuscripts, produce presentations or conduct literature reviews. Just 10% said they use them to help write grant applications and 10% to generate graphics and pictures. (These numbers are based on a subset of around 500 responses; a technical error in the poll initially prevented people from selecting more than one option.)

Survey participants shared their thoughts on the potential of generative AI, and concerns about its use, through open-ended answers. Some predicted that the tools would have the biggest beneficial impacts on research by helping with tasks that can be boring, onerous or repetitive, such as crunching numbers or analysing large data sets; writing and debugging code; and conducting literature searches. “It’s a good tool to do the basics so you can concentrate on ‘higher thinking’ or customization of the AI-created content,” says Jessica Niewint-Gori, a researcher at INDIRE, the Italian ministry of education’s institute for educational research and innovation in Florence.

Some hoped that AI could speed up and streamline writing tasks, by providing a quick initial framework that could be edited into a more detailed final version.

“Generative language models are really useful for people like me, for whom English isn’t their first language. It helps me write a lot more fluently and quicker than ever before. It’s like having a professional language editor by my side while writing a paper,” says Dhiliphan Madhav, a biologist at the Central Leather Research Institute in Chennai, India.

Fictitious literature

But the optimism was balanced by concerns about the reliability of the tools, and the possibility of misuse. Many respondents were worried about the potential for errors or bias in the results provided by AI. “ChatGPT once created a completely fictitious literature list for me,” says Sanas Mir-Bashiri, a molecular biologist at the Ludwig Maximilian University of Munich in Germany. “None of the publications actually existed. I think it is very misleading.”

Others were concerned that such tools could be used dishonestly, by cheating on assignments, for example, or by generating plausible-sounding scientific disinformation. The idea that AI could be used by ‘paper mills’ to produce fake scientific publications was mentioned frequently, as was the possibility that over-reliance on AI for writing tasks could impede researchers’ creativity and stunt the learning process.

The key, many agreed, is to see AI as a tool to help with work, rather than to replace work altogether. “AI can be a useful tool. However, it has to remain one of the tools. Its limitations and defects have always to be clearly kept in mind and governed,” says Maria Grazia Lampugnani, a retired biologist from Milan, Italy.

doi: https://doi.org/10.1038/d41586-023-00500-8

Article source: https://www.nature.com/articles/d41586-023-00500-8